A Practical Guide to Unix for Mac OS X Users

Introduction

I took programming in high school, but I never took to it. This, I strongly believe, is because it wasn't taught right, and teaching it right means starting at the beginning, with unix. The reason for this is threefold: (1) it gives you a deeper sense of how a computer works (which a glossy front, like Windows, conceals); (2) it's the most natural port of entry into all other programming languages; and (3) it's super useful in its own right. If you don't know unix and start programming, some things will forever remain hazy and mysterious, even if you can't put your finger on exactly what they are.

If you already know a lot about computers, the point is moot; if you don't, then by all means start your programming education by learning unix!

A word about terminology here: I'm in the habit of horrendously confusing and misusing a handful of precisely defined terms. Properly speaking, unix is an operating system, while linux refers to a closely related family of unix-like operating systems, which includes commercial and non-commercial distributions. (Unix was not free under its developer, AT&T, which caused the unix-linux schism.) The command line, as one standard definition puts it, is:

'A means of interacting with a computer program where the user issues commands to the program in the form of successive lines of text (command lines). The interface is usually implemented with a command line shell, which is a program that accepts commands as text input and converts commands to appropriate operating system functions.'

So what I mean when I proselytize for 'unix' is simply that you learn how to punch commands in on the command line.

The terminal is your portal into this world. There is a suite of commands to become familiar with and, in the course of learning them, you learn about computers. Unix is a foundational piece of a programming education. In terms of bang for the buck, it's also an excellent investment: you can gain powerful abilities by learning just a little. My coworker was fresh out of his introductory CS course when he was given a small task by our boss.

He wrote a full-fledged program, reading input streams and doing heavy parsing, and then sent an email to the boss that began, 'After 1.5 days of madly absorbing perl syntax, I completed the exercise.' He didn't know how to use the command line at the time, and the email now lives on as a joke, and as a monument to the power of the terminal. You can find ringing endorsements for learning the command line from all corners of the internet. For instance, in his excellent online course, Balaji Srinivasan writes:

A command line interface (CLI) is a way to control your computer by typing in commands rather than clicking on buttons in a graphical user interface (GUI). Most computer users are only doing basic things like clicking on links, watching movies, and playing video games, and GUIs are fine for such purposes. But to do industrial strength programming - to analyze large datasets, ship a webapp, or build a software startup - you will need an intimate familiarity with the CLI. Not only can many daily tasks be done more quickly at the command line, many others can only be done at the command line, especially in non-Windows environments.

You can understand this from an information transmission perspective: while a standard keyboard has 50+ keys that can be hit very precisely in quick succession, achieving the same speed in a GUI is impossible as it would require rapidly moving a mouse cursor over a profusion of 50 buttons. It is for this reason that expert computer users prefer command-line and keyboard-driven interfaces.

If you have a PC, abandon all hope, ye who enter here! Just kidding—partially.

None of the native Windows shells are unix-like. Instead, they're marked with hideous deformities that betray their ignoble origin as grandchildren of the MS-DOS command interpreter.

If you didn't have a compelling reason until now to quit using PCs, here you are. Typically, my misguided PC friends don't use the command line on their local machines; instead, they have to ssh into some remote server running Linux. (You can do this with an ssh client, but don't ask me how.) On a Macintosh you can start practicing on the command line right away without having to install a Linux distribution, because Mac OS X has its own flavor of unix built in. For both Mac and PC users who want a bona fide Linux command line, one easy way to get it is to rent a Linux server in the cloud. If you want to go whole hog, you can download and install a Linux distribution (there are several popular choices), but this is asking a lot of non-hardcore-nerds (less drastically, you could boot Linux off of a USB drive or run it in a virtual machine).

1 I should admit, you can and should get around this by downloading a unix-like environment for Windows; one such project's homepage states: 'Get that Linux feeling - on Windows'

2 However, if you're using Mac OS rather than Linux, note that it does not come with the GNU coreutils, which are the gold standard.

The Definitive Guides to Unix, Bash, and the Coreutils

Before going any further, it's only fair to plug the authoritative guides which, unsurprisingly, can be found right on your command line: $ man bash $ info coreutils (The $ at the beginning of the line represents the terminal's prompt.) These are good references, but too overwhelming to serve as a starting point. There are also great resources online, although these guides, too, are exponentially more useful once you have a small foundation to build on.

The Unix Filestructure

All the files and directories (a fancy word for 'folders') on your computer are stored in a hierarchical tree. Picture a tree in your backyard upside-down, so the trunk is on the top.

If you proceed downward, you get to big branches, which then give way to smaller branches, and so on. The trunk contains everything in the sense that everything is connected to it.

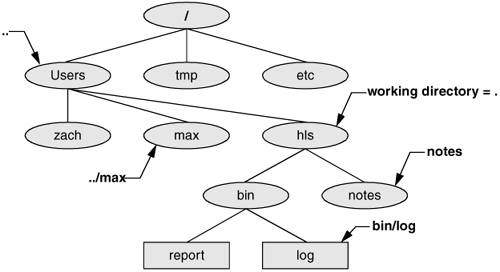

This is the way it looks on the computer, too, and the trunk is called the root directory. In unix it's represented with a slash, /, so the full path to a file looks like this:

/dir1/dir2/dir3/somefile

Note that a full path always starts with the root, because the root contains everything. As we'll see below, this won't necessarily be the case if we specify the address in a relative way, with respect to our current location in the filesystem. Let's examine the directory structure on our Macintosh.

We'll go to the root directory and look down just one level with the unix command tree (for example, tree -L 1; note that tree doesn't come preinstalled on a Mac). If we tried to look at the whole thing, we'd print out every file and directory on our computer!

You'll sometimes see a directory written with a trailing slash, as in dir1/. This helpfully reminds you that the entity is a directory rather than a file, but on the command line the more compact dir1 is sufficient. There are a handful of unix commands which behave slightly differently if you leave the trailing slash on, but this sort of extreme pedantry isn't worth worrying about.

Where Are You? - Your Path and How to Navigate through the Filesystem

When you open up the terminal to browse through your filesystem, run a program, or do anything, you're always somewhere. You start out in the designated home directory when you open up the terminal. The home directory's path is preset by a global variable called HOME.

On a Mac, the home directory is /Users/username. As we navigate through the filesystem, there are some conventions. The current working directory (cwd), whatever directory we happen to be in at the moment, is specified by a dot (.); the directory one level up is specified by two dots (..); and the root, as we've seen, is a slash (/).

To see where we are, we can print working directory: $ pwd To move around, we can change directory: $ cd /some/path By convention, if we leave off the argument and just type: $ cd we will go home. To make directory (i.e., create a new folder) we use: $ mkdir As an example, suppose we're in our home directory, /Users/username, and want to go up one level to /Users. We can do this two ways: $ cd /Users or: $ cd .. This illustrates the difference between an absolute path and a relative path.

In the former case, we specify the complete address, while in the latter we give the address with respect to our cwd. We could even accomplish this with: $ cd /Users/username/..

Or, if our primary goal were obfuscation, by maniacally seesawing back and forth: $ cd /Users/username/../username/.. This distinction between the two ways to specify a path may seem pedantic, but it's not.

Many scripting errors are caused by programs expecting an absolute path and receiving a relative one instead, or vice versa. Use relative paths if you can, because they're more portable: if the whole directory structure gets moved, they'll still work.
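Here's a small sketch of the same navigation you can paste into a terminal. To avoid touching anything real, it assumes a scratch directory from mktemp -d containing a fake Users/username tree, and shows that the relative route (cd ..), the absolute route, and the obfuscated seesaw all land in the same place.

```shell
# A scratch stand-in for /Users/username; pwd normalizes the mktemp path.
base=$(cd "$(mktemp -d)" && pwd)
mkdir -p "$base/Users/username"

cd "$base/Users/username"
cd ..                            # relative: one level up from the cwd
relative=$(pwd)

cd "$base/Users/username"
cd "$base/Users"                 # absolute: the complete address
absolute=$(pwd)

echo "$relative"
echo "$absolute"                 # same directory, two routes

# The seesaw route: down into username, up, down again, up again.
cd "$base/Users/username/../username/.."
pwd
```

All three pwd results print the scratch copy of Users.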

Let's mess around. We know cd with no arguments takes us home, so try the following experiment: $ echo $HOME # print the variable HOME /Users/username $ cd # cd is equivalent to cd $HOME $ pwd # print working directory shows us where we are /Users/username $ unset HOME # unset HOME erases its value $ echo $HOME $ cd /some/path # cd into /some/path $ cd # take us HOME? $ pwd /some/path What happened? We stayed in /some/path rather than returning to /Users/username. There's nothing magical about home—it's merely set by the variable HOME.
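If you'd rather not unset HOME in your real session, a subshell keeps the experiment contained: command substitution, $( ... ), runs its contents in a child shell whose changes vanish when it ends. This sketch goes one step further and points HOME at a scratch directory (made with mktemp -d, an assumption for illustration), which changes where a bare cd takes you.

```shell
scratch=$(cd "$(mktemp -d)" && pwd)

# Everything inside $( ... ) runs in a subshell, so the changes to HOME
# and to the cwd are thrown away afterwards
landed=$(
    HOME="$scratch"   # point HOME at the scratch directory
    cd /              # wander off to the root
    cd                # a bare cd goes to whatever HOME says
    pwd               # prints the scratch directory
)
echo "$landed"
echo "$HOME"          # the real HOME is unchanged out here
```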

More about variables soon!

Gently Wading In - The Top 10 Indispensable Unix Commands

Now that we've dipped one toe into the water, let's make a list of the 10 most important unix commands in the universe:

1. pwd
2. ls
3. cd
4. mkdir
5. echo
6. cat
7. cp
8. mv
9. rm
10. man

Every command has a help or manual page, which can be summoned by typing man.

To see more information about pwd, for example, we enter: $ man pwd But pwd isn't particularly interesting and its man page is barely worth reading. A better example is afforded by one of the most fundamental commands of all, ls, which lists the contents of the cwd or of whatever directories we give it as arguments: $ man ls The man pages tend to give TMI (too much information), but the most important point is that commands have flags, which usually come in a one-dash-one-letter or two-dashes-one-word flavor. For example, try ls with the -h (human-readable) and -l (long listing) flags: $ ls -hl This lists the owner of the file; the group the file belongs to (staff, on a typical Mac); the date the file was last modified; and the file size in human-readable form, which means bytes will be rounded to kilobytes, megabytes, gigabytes, etc. The column on the left shows permissions. If you'll indulge mild hyperbole, this simple command is already revealing secrets that are well-hidden by the GUI and known only to unix users. In unix there are three spheres of permission (user, group, and other/world) as well as three particular types for each sphere (read, write, and execute). Everyone with an account on the computer is a unique user and, although you may not realize it, can be part of various groups, such as a particular lab within a university or team in a company.

To see yourself and what groups you belong to, try: $ whoami $ groups Permission is displayed as a string of nine characters, three per sphere (user, then group, then other), with a dash wherever a permission is missing: --------- rwx------ rwxrwx--- rwxrwxrwx This means, respectively: no permission for anybody; read, write, execute permission for only the user; rwx permission for the user and anyone in the group; and rwx permission for the user, group, and everybody else. Permission is especially important in a shared computing environment. You should internalize now that two of the most common errors in computing stem from the two P words we've already learned: paths and permissions. The command chmod governs permission.
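To see those four permission strings on a real file, here's a small sketch. It creates a scratch file (via mktemp -d, an assumption so nothing real is touched), sets each pattern with chmod's numeric shorthand, and prints the first ten characters of the ls -l line: a file-type slot followed by the nine permission characters.

```shell
f="$(cd "$(mktemp -d)" && pwd)/demo.txt"
touch "$f"

# 000, 700, 770, 777 correspond to the four patterns in the text
for mode in 000 700 770 777; do
    chmod "$mode" "$f"
    ls -l "$f" | cut -c1-10    # type slot + 9 permission characters
done
```

This prints ----------, -rwx------, -rwxrwx---, and -rwxrwxrwx, one per line; the leading dash marks a regular file.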

In a long listing you'll also notice a tenth character prepended to the permission string, e.g. drwxr-xr-x: a d marks a directory, an l marks a link, and a dash marks a regular file. Note that if you leave off the -h flag, file sizes are shown in pure bytes, which makes them a little hard to read. A general point about unix commands: they're often robust. For example, with ls you can use an arbitrary number of arguments, and it obeys the convention that an asterisk matches anything (this is known as globbing): $ ls * dir1/* ../* dir2/*.txt dir3/A*.html This monstrosity would list anything in the cwd; anything in directory dir1; anything in the directory one above us; anything in directory dir2 that ends with .txt; and anything in directory dir3 that starts with A and ends with .html. You get the point. Suppose I create some nested directories: $ mkdir -p squirrel/mouse/fox (The -p flag is required to make nested directories in a single shot.) The directory fox is empty, but if I ask you to list its contents for the sake of argument, how would you do it?

Beginners often do it like this: $ cd squirrel $ cd mouse $ cd fox $ ls But this takes forever. Instead, list the contents from the current working directory and, crucially, use bash autocompletion, a huge time saver. To use autocomplete, hit the tab key after you type ls and a space. Bash will show you the directories available (if there are many possible directories, start typing the name, e.g. sq, before you hit tab).

In short, do it like this: $ ls squirrel/mouse/fox/ # use autocomplete to form this path quickly

Single Line Comments in Unix

Anything prefaced with a # (that's pound-space) is a comment and will not be executed: $ # This is a comment. $ # If we put the pound sign in front of a command, it won't do anything: $ # ls -hl Suppose you write a line of code on the command line and decide you don't want to execute it. You have two choices. The first is pressing Ctrl-c, which serves as an 'abort mission.'

The second is jumping to the beginning of the line (Ctrl-a) and adding the pound character. This has an advantage over the first method: the line will be saved in bash history and can thus be retrieved and modified later. In a script, a pound sign followed by a special character (like #!) is sometimes interpreted specially, so take note and include a space after # to be safe.

The Primacy of Text Files, Text Editors

As we get deeper into unix, we'll frequently be using text editors to edit code, and viewing either data or code in text files. When I got my hands on a computer as a child, I remember text editors seemed like the most boring programs in the world (compared to, say, the video games of 1992). And text files were on the bottom of my food chain. But the years have changed me and now I like nothing better than a clean, unformatted .txt file.


It's all you need! If you store your data, your code, your correspondence, your book, or almost anything in .txt files with a systematic structure, they can be parsed on the command line to reveal information from many facets. Here's some advice: do all of your text-related work in a good text editor. Open up clunky Microsoft Word, and you've unwittingly spoken a demonic incantation and summoned the beast.

Are these the words of a lone lunatic? No, because on the command line you can count the words in a text file, search it, input it into a Python program, et cetera. However, a file in Microsoft Word's proprietary, opaque formatting is all but unusable on the command line. Because text editors are extremely important, some people develop deep relationships with them. My co-worker, who is a Vim aficionado, turned to me not long ago and said, 'You know how you should think about editing in Vim? As if you're talking to it.' On the terminal, a ubiquitous and simple editor is nano.

If you're more advanced, try a more powerful editor. Not immune to my co-worker's proselytizing, I've converted to Vim. Although it's sprawling and the learning curve can be harsh (Vim is like a programming language in itself), you can do a zillion things with it.

There's more on this near the end of this article. On the GUI, there are many choices, some of them Mac only. Exercise: Let's try making a text file with nano.

Type: $ nano file.txt and make a small three-row, two-column file.

To emphasize the point again, the programs in the directories specified by your PATH are exactly the programs you can access on the command line by simply typing their names. The PATH is not immutable. You can set it to be anything you want, but in practice you'll want to augment, rather than overwrite, it. By default, it contains directories where unix expects executables, like:

- /bin
- /usr/bin
- /usr/local/bin

Let's say you have just written the command /mydir/newcommand. If you're not going to use the command very often, you can invoke it using its full path every time you need it: $ /mydir/newcommand However, if you're going to be using it frequently, you can just add /mydir to the PATH and then invoke the command by name: $ PATH=/mydir:$PATH # add /mydir to the front of PATH - highest priority $ PATH=$PATH:/mydir # add /mydir to the back of PATH - lowest priority $ newcommand # now invoking newcommand is this easy This is a frequent chore in unix. If you download some new program, you will often find yourself updating the PATH to include the directory containing its binaries. How can we avoid having to do this every time we open the terminal for a new session?

We'll see how when we learn about .bashrc. If you want to shoot yourself in the foot, you can vaporize the PATH: $ unset PATH # not advisable $ ls # now ls is not found -bash: ls: No such file or directory but this is not advisable, save as a one-time educational experience.

1 In fact, if you want to view the source code (written in C) of ls and other members of the coreutils, you can download it from the GNU project.

E.g., get and untar the latest version as of this writing: $ wget ftp://ftp.gnu.org/gnu/coreutils/coreutils-8.9.tar.xz $ tar -xvf coreutils-8.9.tar.xz or use git: $ git clone git://git.sv.gnu.org/coreutils

Links

While we're on the general subject of paths, let's talk about links. If you've ever used the Make Alias command on a Macintosh (not to be confused with the unix command alias), you've already developed intuition for what a link is. Suppose you have a file in one folder and you want that file to exist in another folder simultaneously. You could copy the file, but that would be wasteful. Moreover, if the file changes, you'll have to re-copy it, a huge ball-ache. Links solve this problem.

A link to a file is a stand-in for the original file, often used to access the original file from an alternate file path. It's not a copy of the file but, rather, points to the file. To make a symbolic link, use the command: $ ln -s /path/to/target/file mylink This produces: mylink -> /path/to/target/file in the cwd, as ls -hl will show. Note that removing mylink: $ rm mylink does not affect our original file.
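Here's a minimal sketch of that lifecycle you can run safely. It assumes a scratch directory from mktemp -d, with path/to/target/file recreated inside it as a stand-in for the real target; readlink prints where a link points.

```shell
dir=$(cd "$(mktemp -d)" && pwd)
cd "$dir"
mkdir -p path/to/target
echo "original contents" > path/to/target/file

ln -s "$dir/path/to/target/file" mylink   # make the symbolic link
ls -l mylink | cut -c1                    # l: the entry is a link
readlink mylink                           # prints the target path
cat mylink                                # reads through to the target

rm mylink                                 # deletes only the link...
cat path/to/target/file                   # ...the original is untouched
```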

If we give the target (or source) path as the sole argument to ln -s, the name of the link will be the same as the source file's. So: $ ln -s /path/to/target/file produces: file -> /path/to/target/file Links are incredibly useful for all sorts of reasons, the primary one being, as we've already remarked, if you want a file to exist in multiple locations without having to make extraneous, space-consuming copies. You can make links to directories as well as files. Suppose you add a directory to your PATH that has a particular version of a program in it. If you install a newer version, you'll need to change the PATH to include the new directory. However, if you add a link to your PATH and keep the link always pointing to the most up-to-date directory, you won't need to keep fiddling with your PATH.
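Keeping such a link current is a one-liner. The sketch below assumes a hypothetical myprogram directory (in a mktemp scratch area) holding one subdirectory per version; your PATH would then name .../myprogram/current rather than any specific version. The -n flag matters: without it, ln would follow the old link into version2 and create the new link inside that directory.

```shell
dir=$(cd "$(mktemp -d)" && pwd)
mkdir -p "$dir/myprogram/version1" "$dir/myprogram/version2" "$dir/myprogram/version3"
cd "$dir/myprogram"

ln -s version2 current      # relative link living beside its targets
readlink current            # version2

# Upgrade: -f replaces the existing link, -n treats the old link as a
# plain file instead of descending into the directory it points to
ln -sfn version3 current
readlink current            # version3
```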

The scenario could look like this: $ ls -hl myprogram current -> version3 version1 version2 version3 (where I'm hiding some of the output in the long listing format). In contrast to our other examples, the link is in the same directory as the target. Its purpose is to tell us which version, among the many crowding a directory, we should use. Another good practice is putting links in your home directory to folders you often use.

This way, navigating to those folders is easy when you log in. If you make the link: ~/MYLINK -> /some/long/and/complicated/path/to/an/often/used/directory then you need only type: $ cd MYLINK rather than: $ cd /some/long/and/complicated/path/to/an/often/used/directory Links are everywhere, so be glad you've made their acquaintance!

What is Scripting?

By this point, you should be comfortable using basic utilities like echo, cat, mkdir, cd, and ls. Let's enter a series of commands, creating a directory with an empty file inside it, for no particular reason: $ mkdir tmp $ cd tmp $ pwd /Users/oliver/tmp $ touch myfile.txt # the command touch creates an empty file $ ls myfile.txt $ ls myfile2.txt # purposely execute a command we know will fail ls: cannot access myfile2.txt: No such file or directory What if we want to repeat the exact same sequence of commands 5 minutes later? Massive bombshell: we can save all of these commands in a file! And then run them whenever we like!

Try this: $ nano myscript.sh and write the following: # a first script mkdir tmp cd tmp pwd touch myfile.txt ls ls myfile2.txt This file is called a script (.sh is a typical suffix for a shell script), and writing it constitutes our first step into the land of bona fide computer programming. In general usage, a script refers to a small program used to perform a niche task. What we've written is a recipe that says:

1. create a directory called 'tmp'
2. go into that directory
3. print our current path in the file system
4. make a new file called 'myfile.txt'
5. list the contents of the directory we're in
6. specifically list the file 'myfile2.txt' (which doesn't exist)

This script, though silly and useless, teaches us the fundamental fact that all computer programs are ultimately just lists of commands. Let's run our program! Try: $ ./myscript.sh -bash: ./myscript.sh: Permission denied WTF!

It's dysfunctional. What's going on here is that the file permissions are not set properly. In unix, when you create a file, the default permission is not executable.

You can think of this as a brake that's been engaged and must be released before we can go (and do something potentially dangerous). First, let's look at the file permissions: $ ls -hl myscript.sh -rw-r--r-- 1 oliver staff 75 Oct 12 11:43 myscript.sh Let's change the permissions with the command chmod and execute the script: $ chmod u+x myscript.sh # add executable(x) permission for the user(u) only $ ls -hl myscript.sh -rwxr--r-- 1 oliver staff 75 Oct 12 11:43 myscript.sh $ ./myscript.sh /Users/oliver/tmp/tmp myfile.txt ls: cannot access myfile2.txt: No such file or directory Not bad. Did it work? Yes, it did: it printed stuff out, and we see it's created tmp/myfile.txt: $ ls myfile.txt myscript.sh tmp $ ls tmp myfile.txt An important note is that even though there was a cd in our script, if we type: $ pwd /Users/oliver/tmp we see that we're still in the same directory as we were in when we ran the script. Even though the script entered /Users/oliver/tmp/tmp and did its bidding, we stay in /Users/oliver/tmp. Scripts always work this way: a script you execute runs in its own process, so where it goes is independent of where we go. If you're wondering why anyone would write such a pointless script, you're right: it would be odd if we had occasion to repeat this combination of commands.
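The whole experiment can be replayed in a scratch directory with the sketch below. It recreates the first script minus the deliberately failing ls, and adds a #!/bin/bash first line (an addition to the original script) naming bash as the interpreter; the work directory from mktemp -d is an assumption.

```shell
work=$(cd "$(mktemp -d)" && pwd)
cd "$work"

cat > myscript.sh <<'EOF'
#!/bin/bash
# a first script
mkdir tmp
cd tmp
pwd
touch myfile.txt
ls
EOF

ls -l myscript.sh | cut -c1-10    # typically -rw-r--r--: no x bit yet
chmod u+x myscript.sh             # release the brake for the user
./myscript.sh                     # prints $work/tmp, then myfile.txt
pwd                               # still $work: the script's cd stayed inside it
```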

File Suffixes in Unix

As we begin to script, it's worth following some file naming conventions. We should use common-sense suffixes, like:

- .txt - for text files
- .html - for html files
- .sh - for shell scripts
- .pl - for Perl scripts
- .py - for Python scripts
- .cpp - for C++ code

and so on. Adhering to this organizational practice will enable us to quickly scan our files, and make searching for particular file types easier. As we saw above, commands like ls and find are particularly well-suited to use this kind of information. For example, list all text files in the cwd: $ ls *.txt List all text files in the cwd and below (i.e., including child directories): $ find . -name '*.txt'

1 An astute reader noted that, for commands (as opposed to, say, html or text files), using suffixes is not the best practice because it violates the principle of encapsulation. The argument is that a user is neither supposed to know nor care about a program's internal implementation details, which the suffix advertises.

You can imagine a program that starts out as a shell script called mycommand.sh, is upgraded to Python as mycommand.py, and then is rewritten in C for speed, becoming the binary mycommand. What if other programs depend on mycommand? Then each time mycommand's suffix changes they have to be rewritten—an annoyance. Although I make this sloppy mistake in this article, that doesn't excuse you!

Update: There's a subtlety inherent in this argument that I didn't appreciate the first time around. I'm going to jump ahead of the narrative here, so you may want to skip this for now and revisit it later. Suppose you have two identical Python scripts. One is called hello.py and one is simply called hi. Both contain the following code: #!/usr/bin/env python def yo(): print('hello') In the Python shell, this works: import hello but this doesn't: import hi Traceback (most recent call last): File "<stdin>", line 1, in <module> ImportError: No module named hi Being able to import scripts in Python is important for all kinds of things, such as making modules, but you can only import something with a .py extension. So how do you get around this if you're not supposed to use file extensions?

One sage answered this question as follows: 'The best way to handle this revolves around the core question of whether the file should be a command or a library. Libraries have to have the extension, and commands should not, so making a tiny command wrapper that handles parsing options and then calls the API from the other, imported one is correct.' To elaborate, this considers a command to be something in your PATH, while a library (which could be a runnable script) is not. So, in this example, hello.py would stay the same, as a library not in your PATH: #!/usr/bin/env python def yo(): print('hello') hi, a command in your PATH, would look like this: #!/usr/bin/env python import hello hello.yo() Hat tip: Alex

The Shebang

We've left out one important detail about scripting. How does unix know we want to run a bash script, as opposed to, say, a Perl or Python script?

There are two ways to do it. We'll illustrate with two simple scripts, a bash script and a Perl script: $ cat myscript1.sh # a bash script echo 'hello kitty' $ cat myscript1.pl # a Perl script print "hello kitty\n"; The first way to tell unix which program to use to interpret the script is simply to say so on the command line: $ bash myscript1.sh $ perl myscript1.pl The second way is the shebang: a first line beginning with #! that names the interpreter, such as #!/bin/bash or #!/usr/bin/env perl, so that an executable script can be run directly, as in ./myscript1.sh.

The general syntax of chmod is: $ chmod entity+permissiontype or: $ chmod entity-permissiontype where the entity is u (user), g (group), or o (other), and the permission type is r, w, or x. E.g.: $ chmod u+x myfile # make executable for you $ chmod g+rxw myfile # add read write execute permissions for the group $ chmod go-wx myfile # remove write execute permissions for the group # and for everyone else (excluding you, the user) You can also use a for 'all of the above', as in: $ chmod a-rwx myfile # remove all permissions for you, the group, # and the rest of the world If you find the above syntax cumbersome, there's a numerical shorthand you can use with chmod. The only two I have memorized are 777 and 755: $ chmod 777 myfile # grant all permissions (rwxrwxrwx) $ chmod 755 myfile # reserve write access for the user, # but grant all other permissions (rwxr-xr-x) You can read more about the numeric code in man chmod. In general, it's a good practice to allow your files to be writable by you alone, unless you have a compelling reason to share access to them.
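Both ways of choosing an interpreter, plus both flavors of chmod, fit in one short sketch. It sticks to bash (Perl may not be installed everywhere) and works in a mktemp -d scratch directory, which is an assumption for illustration.

```shell
dir=$(cd "$(mktemp -d)" && pwd)
cd "$dir"

# Way 1: no shebang, so name the interpreter on the command line
echo "echo 'hello kitty'" > myscript1.sh
bash myscript1.sh

# Way 2: a shebang on the first line names the interpreter, so the
# file can be run directly once it's executable
printf '#!/bin/bash\necho "hello kitty"\n' > myscript2.sh
chmod 755 myscript2.sh               # numeric shorthand
ls -l myscript2.sh | cut -c1-10      # -rwxr-xr-x
./myscript2.sh

# Symbolic form: take read and execute away from group and others
chmod go-rx myscript2.sh
ls -l myscript2.sh | cut -c1-10      # -rwx------
```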

ssh

In addition to chmod, there's another command it would be remiss not to mention. For many people, the first time they need to go to the command line, rather than the GUI, is to use the ssh protocol. Suppose you want to use a computer, but it's not the computer that's in front of you.

It's a different computer in some other location, say, at your university, your company, or elsewhere. ssh is the command that allows you to log into a computer remotely over the network. Once you've sshed into a computer, you're in its shell and can run commands on it just as if it were your personal laptop. To ssh, you need to know the address of the host computer you want to log into, your user name on that computer, and the password. The basic syntax is: $ ssh username@host For example: $ ssh username@myhost.university.edu If you're trying to ssh into a private computer and don't know the hostname, use its IP address (username@IP-address).

ssh also allows you to run a command on the remote server without logging in. For instance, to list the contents of your remote computer's home directory, you could run: $ ssh username@myhost.university.edu 'ls -hl' Cool, eh?

Moreover, if you have ssh access to a machine, you can copy files to or from it with a utility such as scp, a great way to move data without an external hard drive. On GitHub, for example, you can register an ssh key via Settings > SSH Keys > Add SSH Key. If this is your first encounter with ssh, you'd be surprised how much of the work of the world is done by ssh. It's worth reading the extensive man page, which gets into matters of computer security and cryptography.

1 The host also has to enable ssh access. On a Macintosh, for example, it's disabled by default, but you can turn it on with the Remote Login option in the Sharing preferences. On Ubuntu, an ssh server likewise has to be installed and running.

2 As you get deeper into the game, tracking your scripts and keeping a single, stable version of them becomes crucial. Version control, a vast subject in its own right, is the neat solution to this problem, and Git is the industry standard for it.

Proper output goes into stdout while errors go into stderr. The syntax for saving stderr in unix is 2>, as in: $ # save the output into out.txt and the error into err.txt $ ./myscript.sh > out.txt 2> err.txt $ cat out.txt /Users/oliver/tmp/tmp myfile.txt $ cat err.txt mkdir: cannot create directory ‘tmp’: File exists ls: cannot access myfile2.txt: No such file or directory When you think about it, the fact that output and error are separated is supremely useful. At work, sometimes we parallelize heavily and run 1000 instances of a script.

For each instance, the error and output are saved separately. The 758th job, for example, might look like this: ./myjob -instance 758 > out758.o 2> out758.e (I'm in the habit of using the suffixes .o for output and .e for error.) With this technique we can quickly scan through all 1000 .e files and check if their size is 0. If it is, we know there was no error; if not, we can re-run the failed jobs. Some programs are in the habit of echoing run statistics or other information to stderr.

This is an unfortunate practice because it muddies the water and, as in the 1000-job example above, would make it hard to tell if there was an actual error. Output vs. error is a distinction that many programming languages make. For example, in C++ you write to stdout with cout and to stderr with cerr. On the command line, the redirection syntax is:

- 1> saves stdout to a file (plain old > also works)
- 2> saves stderr to a file

as in: $ ./myscript.sh 1> out.o 2> out.e $ ./myscript.sh > out.o 2> out.e # these two lines are identical What if we want to choose where things will be printed from within our script? In bash, appending >&2 to a command sends its output to stderr, as in echo 'something went wrong' >&2.

Every command also returns a numeric exit code (or exit status) when it finishes. This is usually employed to tell the user whether or not the command successfully executed.
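Putting these pieces together, here's a sketch of a well-behaved script: normal output on stdout, complaints on stderr via >&2, and an exit status the caller can check. The script name checkfile.sh and its behavior are hypothetical, and the scratch directory comes from mktemp -d.

```shell
dir=$(cd "$(mktemp -d)" && pwd)
cd "$dir"

# Hypothetical script: reports whether its argument exists
cat > checkfile.sh <<'EOF'
#!/bin/bash
if [ -e "$1" ]; then
    echo "found $1"                   # stdout
    exit 0                            # success
else
    echo "error: $1 does not exist" >&2   # stderr
    exit 1                            # failure
fi
EOF
chmod u+x checkfile.sh

touch present.txt
./checkfile.sh present.txt > out.o 2> out.e
echo "exit code: $?"                  # 0
./checkfile.sh missing.txt > out2.o 2> out2.e
echo "exit code: $?"                  # 1

# if tests the exit status directly
if ./checkfile.sh missing.txt 2>/dev/null; then
    echo "program succeeded"
else
    echo "program failed"
fi
```

After it runs, out.o holds the success message and out.e is empty, while out2.o is empty and out2.e holds the error: exactly the split that makes scanning .e files for non-zero size work.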

By convention, successful execution returns 0. For example: $ echo joe joe $ echo $? # query exit code of previous command 0 Let's see how the exit code can be useful. We'll make a script, testexitcode.sh, such that: $ cat testexitcode.sh #!/bin/bash sleep 10 This script just pauses for 10 seconds. First, we'll let it run, and then we'll interrupt it using Ctrl-c: $ ./testexitcode.sh # let it run $ echo $?

0 $ ./testexitcode.sh # interrupt it ^C $ echo $? 130 The non-zero exit code tells us that it failed. Now we'll try the same thing with an if statement: $ ./testexitcode.sh $ if [ $? -eq 0 ]; then echo 'program succeeded'; else echo 'program failed'; fi program succeeded $ ./testexitcode.sh ^C $ if [ $? -eq 0 ]; then echo 'program succeeded'; else echo 'program failed'; fi program failed

In research, you might run hundreds of command-line programs in parallel. For each instance, there are two key questions: (1) Did it finish?

(2) Did it run without error? Checking the exit status is the way to address the second point. You should always check the documentation of the program you're running to find information about its exit codes, since some programs use different conventions. Read The Linux Documentation Project's discussion of exit status. Question: What's going on here?

$ if echo joe; then echo joe; fi joe joe This is yet another example of bash allowing you to stretch syntax like silly putty. In this code snippet, echo joe is run, and its successful execution passes a true return code to the if statement. So, the two joes we see echoed to the console are from the statement to be evaluated and the statement inside the conditional. We can also invert this formula, doing something if our command fails: $ outputdir=nonexistentdir # set output dir equal to a nonexistent dir $ if ! cd $outputdir; then echo 'couldnt cd into output dir'; fi -bash: cd: nonexistentdir: No such file or directory couldnt cd into output dir $ mkdir existentdir # make a test directory $ outputdir=existentdir $ if ! cd $outputdir; then echo 'couldnt cd into output dir'; fi $ # no error - now we're in the directory existentdir Did you follow that?

(The ! means logical NOT: it inverts the exit status of the command that follows it.) The idea is that we try to cd and, if that's unsuccessful, we echo an error message. This is a particularly useful line to include in a script: if the user gives an output directory as an argument and the directory doesn't exist, we print an error and exit; if it does exist, we cd into it and it's business as usual.

while condition; do ...; done

I use while loops much less than for loops, but here's an example: $ x=1; while ((x.
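Here's a minimal sketch of both ideas from this part. enter_outputdir is a hypothetical function wrapping the if ! cd guard; because it's a function it uses return 1, whereas a standalone script would use exit 1. count_to_three is a plain while loop that tests its condition with [ "$x" -le 3 ], which any POSIX shell understands; bash's (( )) arithmetic form works too.

```shell
#!/bin/bash
# Hypothetical function: cd into the directory given as $1, or complain and fail.
enter_outputdir() {
    if ! cd "$1"; then                      # ! flips cd's exit status
        echo "couldn't cd into $1" >&2      # our message goes to stderr
        return 1                            # in a standalone script: exit 1
    fi
    echo "now in $(pwd)"
}

# A while loop that prints 1, 2, 3 on separate lines.
count_to_three() {
    local x=1
    while [ "$x" -le 3 ]; do
        echo "$x"
        x=$((x + 1))                        # same as ((x++)) in bash
    done
}
```

For example, enter_outputdir nonexistentdir prints cd's own error followed by our message and returns 1, while count_to_three prints 1, 2, and 3 on separate lines.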

Files whose names begin with a dot are hidden: ls won't show them unless you use ls -a or ls -al, or list them explicitly by name. This is useful for files that you generally want to keep hidden from the user or discourage tinkering with. Many programs, such as bash, vim, and git, are highly configurable. Each uses dotfiles to let the user add functionality, change options, switch key bindings, etc. For example, here are some of the dotfiles each program employs:

    bash - .bashrc
    vim - .vimrc
    git - .gitconfig

The most famous dotfile in my circle is .bashrc, which resides in $HOME and configures your bash. Actually, let me retract that: let's say .bash_profile instead of .bashrc. (The difference: .bash_profile is read by login shells, which is what the Mac's Terminal opens by default, while .bashrc is read by interactive non-login shells.)
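Since login and non-login shells read different files, a common arrangement (one convention among several, not something bash requires) is to keep your interactive settings in ~/.bashrc and have ~/.bash_profile pull it in, so every new shell ends up with the same setup. A minimal sketch of the lines you'd put in ~/.bash_profile:

```shell
# In ~/.bash_profile: also load ~/.bashrc, if it exists,
# so login shells (e.g. a new Mac Terminal window) get the same settings.
if [ -f "$HOME/.bashrc" ]; then
    . "$HOME/.bashrc"    # "." (source) runs the file in the current shell
fi
```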

In any case, the idea is that this dotfile gets executed as soon as you open up the terminal and start a new session. It is therefore ideal for setting your PATH and other variables, adding functions, creating aliases, and doing any other setup-related chore. For example, suppose you download a new program into /some/path/to/prog and you want to add it to your PATH. Then in your .bash_profile you'd add:

    export PATH=/some/path/to/prog:$PATH

Recalling how export works, this will allow any programs we run on the command line to have access to our amended PATH. Note that we're adding this to the front of our PATH (so, if another copy of the program already exists elsewhere in our PATH, this one will take precedence). Here's an example snippet of my setup file:

    PATH=/apps/python/2.7.6/bin:$PATH    # use this version of Python
    PATH=/apps/R/3.1.2/bin:$PATH         # use this version of R
    PATH=/apps/gcc/4.6.0/bin/:$PATH      # use this version of gcc
    export PATH

There is much ado about .bashrc (read .bash_profile); it even inspired one of the greatest unix blog-post titles of all time, although that blogger is only playing with his prompt, as it were.
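If your .bash_profile gets sourced more than once (say, you re-run it after an edit with . ~/.bash_profile), lines like the ones above keep stacking duplicate entries onto PATH. Here's a minimal sketch of a guard; path_prepend is a hypothetical helper name, and the design choice is to leave PATH untouched when the directory is already present rather than moving it to the front.

```shell
# Hypothetical helper: put a directory at the front of PATH unless it's already there.
path_prepend() {
    case ":$PATH:" in
        *":$1:"*) ;;              # already in PATH: do nothing
        *) PATH="$1:$PATH" ;;     # otherwise prepend it so it takes precedence
    esac
}

path_prepend /some/path/to/prog
export PATH
```

Afterwards, type -a prog (with prog standing in for your program's name) lists every copy bash can find, in PATH order, so you can confirm which one wins.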

As you go on in unix and add things to your .bash_profile, it will evolve into a kind of fingerprint, optimizing bash in your own unique way (and potentially making it difficult for others to use). If you have multiple computers, you'll want to reuse most of your program configuration on all of them. My co-worker uses a nice system, which I've adopted, in which the local and global aspects of setup are separated. For example, if you wanted to use certain aliases across all your computers, you'd put them in a global settings file. However, changes to your PATH might be different on different machines, so you'd store these in a local settings file. Then any time you change computers you can simply copy the global files and get your familiar setup, saving lots of work.

A convenient way to achieve a unified shell environment across all the systems you work on is to put your dotfiles on a server you can access from anywhere. This is exactly what I've done. Here's a sketch of how the idea works: in $HOME, make a .dotfiles/bash directory and populate it with your setup files, using a suffix of either local or share:

    $ ls -1 .dotfiles/bash/
    bash_aliases_local
    bash_aliases_share
    bash_functions_share
    bash_inirun_local
    bash_paths_local
    bash_settings_local
    bash_settings_share
    bash_welcome_local
    bash_welcome_share

When .bash_profile is read at the start of your session, it sources all these files.
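Here's a minimal sketch of that sourcing step. The function name load_dotfiles is hypothetical, and it assumes the directory layout above with file names ending in share or local; it sources the shared files first so that the machine-specific local files get the last word when both set the same thing.

```shell
#!/bin/bash
# Hypothetical loader for ~/.bash_profile: source shared files, then local ones.
load_dotfiles() {
    local dotdir=${1:-$HOME/.dotfiles/bash}   # default location, overridable
    local f
    for f in "$dotdir"/*share "$dotdir"/*local; do
        if [ -f "$f" ]; then                  # skip an unmatched glob pattern
            . "$f"                            # run the file in the current shell
        fi
    done
}

load_dotfiles
```

With this in place, setting up a new machine means copying the share files over and writing whatever local files that machine needs.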
